Building an AI-powered video dubber with Transloadit

At Transloadit, our AI Robots can leverage the API of either Google Cloud or AWS to deliver powerful AI without the headache associated with setting it up. There's no need to train a model, and with both of these backend APIs constantly evolving, you benefit from each provider's improvements without any extra work on your side.

On top of that, Transloadit abstracts their interfaces so switching providers is as easy as changing one parameter.

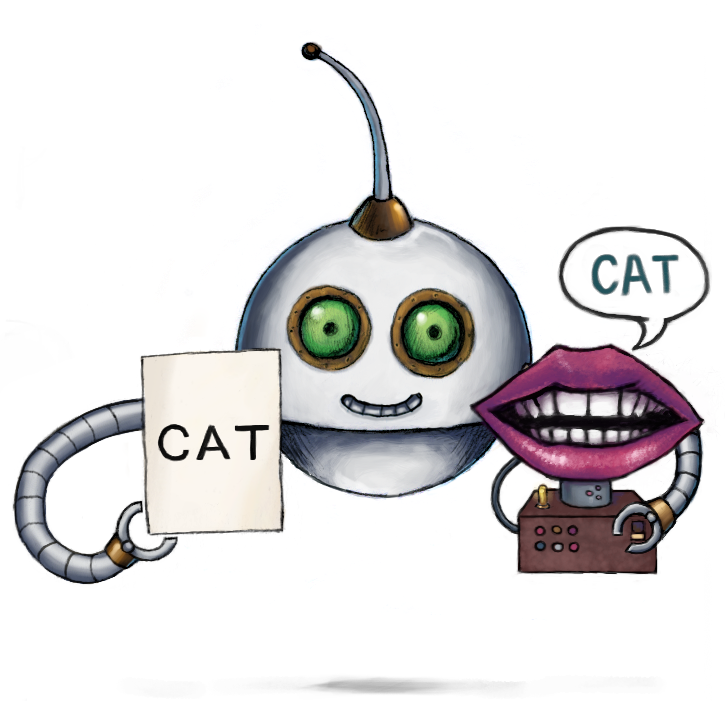

Since we've released not one but two new AI Robots (the /text/speak Robot and the /text/translate Robot), I thought it would be interesting to combine the two into a single project by creating an AI-powered video dubber. Throughout this blog, we'll look at how to use various Robots to create a powerful localizing setup tied together with some Python 🐍

Setup

Naturally, the first step is to create our project folder containing a Python file and our input video. Then run pip install pytransloadit inside your console to gain access to Transloadit's Python SDK.

Within our Python file, we need to import the Transloadit SDK as well as some tooling to handle JSON and HTTP requests:

import json

import urllib.request

from transloadit import client

Now declare the source and target language variables (for other language codes, see the Robot documentation). Then initialize the Transloadit client, making sure to replace TRANSLOADIT_KEY and TRANSLOADIT_SECRET with your own Transloadit key and secret.

source_language = 'en-GB'

target_language = 'de-DE'

tl = client.Transloadit(TRANSLOADIT_KEY, TRANSLOADIT_SECRET)
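
Hardcoding credentials is fine for a quick experiment, but if the script ends up in version control it may be safer to read them from the environment. Here is a minimal sketch, assuming environment variables named TRANSLOADIT_KEY and TRANSLOADIT_SECRET. Both the variable names and the load_credentials helper are my own choices for illustration, not something the SDK requires.

```python
import os


def load_credentials(env=None):
    """Return (key, secret) from the environment, failing early if either is missing."""
    # Hypothetical helper: the variable names below are an assumption.
    env = os.environ if env is None else env
    key = env.get("TRANSLOADIT_KEY")
    secret = env.get("TRANSLOADIT_SECRET")
    if not key or not secret:
        raise RuntimeError("Set TRANSLOADIT_KEY and TRANSLOADIT_SECRET first")
    return key, secret
```

The returned pair can then be passed straight to client.Transloadit(key, secret).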

Generating subtitles

Before we can go any further, it's time to create our Template. Head to your Transloadit console and copy the JSON listed below into a new Template, making sure to take note of the Template ID. This Template's first Step takes our input video, generates subtitles in the language we specify, then outputs its result as a JSON file that can be parsed and processed later. In the next Step, using the same input file, we encode our video so that its URL can be referenced by the Template we create later on.

{
  "steps": {
    "transcribe_json": {
      "use": ":original",
      "robot": "/speech/transcribe",
      "provider": "gcp",
      "source_language": "${fields.language}",
      "format": "json",
      "result": true
    },
    "encode": {
      "use": ":original",
      "robot": "/video/encode",
      "ffmpeg_stack": "v6.0.0",
      "result": true,
      "preset": "ipad-high",
      "turbo": true
    }
  }
}

Back in our Python program, we call the Template like so:

subtitle_assembly = tl.new_assembly({'template_id': 'FIRST_TEMPLATE_ID', 'fields': {'language': source_language}})

subtitle_assembly.add_file(open('assets/crab.mp4', 'rb'))

subtitle_assembly_response = subtitle_assembly.create(retries=5, wait=True)
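
Before reading results out of the response, it can help to confirm that the Assembly actually finished and produced the Step we expect. The sketch below works on the plain dictionary found in subtitle_assembly_response.data. The first_result_url helper is hypothetical, and I'm assuming the status JSON carries an error key on failure, so verify that against a real response before relying on it.

```python
def first_result_url(assembly_data, step_name):
    """Return the ssl_url of the first file produced by `step_name`."""
    # Assumption: failed Assemblies include an "error" key in their status JSON.
    if assembly_data.get("error"):
        raise RuntimeError("Assembly failed: {}".format(assembly_data["error"]))

    # "results" maps each Step name to a list of output files.
    files = (assembly_data.get("results") or {}).get(step_name) or []
    if not files:
        raise KeyError("No results for Step '{}'".format(step_name))
    return files[0]["ssl_url"]
```

With this in place, first_result_url(subtitle_assembly_response.data, "transcribe_json") raises a readable error rather than failing on a missing key halfway through a chain of .get() calls.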

Processing the subtitles

The Template we created in the previous section should have provided you with a JSON file that looks something like this:

{
  "text": "but the crab feeding grounds are still a long way off\nplay must press on",
  "words": [
    {
      "startTime": 5.2,
      "endTime": 8.5,
      "text": "but"
    },
    {
      "startTime": 8.5,
      "endTime": 8.6,
      "text": "the"
    }
    ...

Using SSML (GCP/AWS), we can leverage the startTime and endTime properties to preserve the original voiceover timing.

Using some basic conditional logic, we can check a few things:

- If the current word we're looking at is the first word, wait for the same amount of time as its endTime
- If the current word is not the first word, wait for the difference between the startTime of this word and the endTime of the previous word
- If the amount of time we're waiting for is greater than 0, and the current word is not the first word, then wait for the difference between the endTime of this word and the endTime of the previous word
- If the current word is not the last word, then add a space at the end, as this prevents translation issues

To demonstrate the logic just described, I have implemented it inside the Python code shared below. Note that it also includes a few vital steps for setting up the next portion of our program. Also make sure that you create a words folder in the same directory as the Python file in order for this to work.

# URL of the transcription JSON produced by the "transcribe_json" Step
subtitles_url = subtitle_assembly_response.data.get("results").get("transcribe_json")[0].get("ssl_url")

with urllib.request.urlopen(subtitles_url) as url:
    data = json.loads(url.read().decode())

# Base audio filter; one volume filter per word is appended further down
ffmpeg = "volume=enable:volume=1"
startTimes = []
endTimes = []

f = open("words/text.txt", "w")
f.write("<speak>")

for x in range(len(data['words'])):
    text = data['words'][x]['text']
    pause = 0

    if x == 0:
        # First word: wait for as long as its endTime
        pause = data['words'][x]['endTime']
    else:
        # Gap between the previous word's end and this word's start
        pause = data['words'][x]['startTime'] - data['words'][x - 1]['endTime']

    if pause > 0:
        if x != 0:
            # Real gap: wait for the difference between the two endTimes
            pause = data['words'][x]['endTime'] - data['words'][x-1]['endTime']

        f.write("<break time='{pause}s'/> {text}".format(pause=pause, text=text))
    else:
        f.write("{text}".format(text=text))

    # Separate words with a space, except after the last one
    if x != len(data['words']) - 1:
        f.write(" ")

    # Lower the original audio for 0.5 seconds, starting at the end of this word
    startTimes.append(data['words'][x]['endTime'])
    endTimes.append(data['words'][x]['endTime'] + 0.5)

f.write("</speak>")
f.close()

# Append one volume filter per window to the FFmpeg audio filter string
for x in range(len(startTimes)):
    ffmpeg += ", volume=enable='between(t,{start},{end})':volume=0.2".format(start=startTimes[x], end=endTimes[x])

Let's quickly break down the Python code above. First, we store the JSON URL we generated in the previous Step in subtitles_url, then decode the JSON itself into a local variable.

We then declare the ffmpeg variable, which stores the parameters we will be using in our Template. This variable needs to be initialized with "volume=enable:volume=1" to make it easier to append new values to the command.

Next, we run through the logic we established earlier, finally adding a <break> tag where the pause is greater than 0, causing the text-to-speech (TTS) to wait for a specified amount of time.

We then add an FFmpeg command to our ffmpeg variable that will muffle the original audio during the sections where the TTS is speaking. You can, of course, improve upon this implementation. Do note, however, that the command will lower the volume of the original audio stream for 0.5 seconds for every word. This may cause issues with longer videos that contain more words for translation, due to the high number of FFmpeg commands. For another demo on how to use FFmpeg commands to manipulate audio, consider checking out our "Remove silence from an audio file" demo.
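
One way to keep the filter chain short on longer videos is to merge ducking windows that overlap or touch before turning them into FFmpeg filters. This is a sketch rather than part of the original program: merge_windows and build_volume_filter are hypothetical helpers, and the output mirrors the filter string format used in the code above.

```python
def merge_windows(windows):
    """Merge (start, end) windows that overlap or touch into larger ones."""
    merged = []
    for start, end in sorted(windows):
        if merged and start <= merged[-1][1]:
            # Extend the previous window instead of starting a new one.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def build_volume_filter(windows, ducked_volume=0.2):
    """Build the audio filter string with one volume entry per merged window."""
    parts = ["volume=enable:volume=1"]
    for start, end in merge_windows(windows):
        parts.append(
            "volume=enable='between(t,{start},{end})':volume={vol}".format(
                start=start, end=end, vol=ducked_volume
            )
        )
    return ", ".join(parts)
```

Calling build_volume_filter(zip(startTimes, endTimes)) would then stand in for the final loop. For words spoken in quick succession, consecutive 0.5 second windows collapse into a single filter, so the command grows with the number of pauses rather than the number of words.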

If everything runs successfully, we will be left with a text file containing the following data:

<speak><break time='8.5s'/> but the crab feeding grounds are still a long way off <break time='7.199999999999999s'/> play must press on</speak>
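
The 7.199999999999999s in that output is floating-point noise from subtracting timestamps. A small variation, sketched below as a pure function, follows the same rules but rounds each pause before writing it. The build_ssml name and the two-decimal precision are my own choices for illustration, and the input is assumed to be the words list from the transcription JSON.

```python
def build_ssml(words, precision=2):
    """Turn a list of {'startTime', 'endTime', 'text'} dicts into SSML with pauses."""
    parts = ["<speak>"]
    for i, word in enumerate(words):
        if i == 0:
            # First word: wait for as long as its endTime, as in the logic above.
            pause = word["endTime"]
        else:
            pause = word["startTime"] - words[i - 1]["endTime"]
            if pause > 0:
                # A real gap: wait for the difference between the two endTimes.
                pause = word["endTime"] - words[i - 1]["endTime"]

        if pause > 0:
            # Round to drop floating-point noise such as 7.199999999999999.
            parts.append("<break time='{}s'/> {}".format(round(pause, precision), word["text"]))
        else:
            parts.append(word["text"])

        # Separate words with a space, except after the last one.
        if i != len(words) - 1:
            parts.append(" ")
    parts.append("</speak>")
    return "".join(parts)
```

Returning a string instead of writing to a file also makes the logic easy to check against a handful of hand-written words before spending any Assembly minutes on it.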

Merging everything

The following Template will be long, so let's take it piece by piece. Head back to your Transloadit console and create the following Template, again making sure to note your Template ID.

Firstly, we need to import the video file from the URL provided by the last Template.

{
  "steps": {
    "import_video": {
      "robot": "/http/import",
      "url": "${fields.video}",
      "result": true
    },
    ...
  }
}

Then we translate the uploaded text file to our target language using the /text/translate Robot.

{
  "steps": {
    ...
    "translate": {
      "use": ":original",
      "robot": "/text/translate",
      "result": true,
      "provider": "aws",
      "target_language": "${fields.target_language}",
      "source_language": "${fields.source_language}"
    },
    ...
  }
}

To produce the narration, we use the /text/speak Robot, making sure to enable the ssml parameter so that our <break> tags are interpreted rather than read aloud.

{
  "steps": {
    ...
    "tts": {
      "robot": "/text/speak",
      "use": "translate",
      "provider": "aws",
      "target_language": "${fields.target_language}",
      "ssml": true
    },
    ...
  }
}

Next, we need to extract the audio from the original video, then combine that with our narration. I've found that setting the volume parameter to "sum" produces the most clearly audible results, but feel free to tinker to your liking. We also combine this with the ffmpeg parameter to use the custom FFmpeg filter that we set up earlier.

{
  "steps": {
    ...
    "extract_audio": {
      "use": "import_video",
      "robot": "/video/encode",
      "result": true,
      "preset": "mp3",
      "ffmpeg": {
        "af": "${fields.ffmpeg}"
      },
      "ffmpeg_stack": "v6.0.0"
    },
    "merged_audio": {
      "robot": "/audio/merge",
      "preset": "mp3",
      "result": true,
      "ffmpeg_stack": "v6.0.0",
      "use": {
        "steps": [
          {
            "name": "extract_audio",
            "as": "audio"
          },
          {
            "name": "tts",
            "as": "audio"
          }
        ],
        "volume": "sum",
        "bundle_steps": true
      }
    },
    ...
  }
}

Finally, we merge the result of combining the two audio streams with our original video. This will leave us with the following final result:

{
  "steps": {
    ...
    "merged_video": {
      "robot": "/video/merge",
      "preset": "hls-720p",
      "ffmpeg_stack": "v6.0.0",
      "use": {
        "steps": [
          {
            "name": "merged_audio",
            "as": "audio"
          },
          {
            "name": "import_video",
            "as": "video"
          }
        ],
        "bundle_steps": true
      }
    }
  }
}

Back in our Python program, we create our Assembly like so:

video_url = subtitle_assembly_response.data.get("results").get("encode")[0].get("ssl_url")

merge_assembly = tl.new_assembly({
    'template_id': "SECOND_TEMPLATE_ID",
    'fields': {
        'video': video_url,
        'source_language': source_language,
        'target_language': target_language,
        'ffmpeg': ffmpeg
    }
})

merge_assembly.add_file(open('words/text.txt', 'rb'))
merge_assembly_response = merge_assembly.create(retries=5, wait=True)

finished_url = merge_assembly_response.data.get("results").get("merged_video")[0].get("ssl_url")
print("Your result:", finished_url)
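
Because the target language is just a Template field, dubbing into several languages amounts to building one set of Assembly parameters per language. Here is a rough sketch, assuming the same fields as above. Both build_merge_params and params_for_languages are hypothetical helpers, and any extra language codes you pass in should be checked against the Robot documentation for your provider.

```python
def build_merge_params(template_id, video_url, source_language, target_language, ffmpeg):
    """Return the parameter dict for one merge Assembly."""
    return {
        "template_id": template_id,
        "fields": {
            "video": video_url,
            "source_language": source_language,
            "target_language": target_language,
            "ffmpeg": ffmpeg,
        },
    }


def params_for_languages(template_id, video_url, source_language, target_languages, ffmpeg):
    """Build one parameter dict per target language, in the order given."""
    return [
        build_merge_params(template_id, video_url, source_language, lang, ffmpeg)
        for lang in target_languages
    ]
```

Each dictionary can then be handed to tl.new_assembly(...), followed by the same add_file and create calls shown above. The transcription and the words/text.txt file only need to be produced once per video.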

Testing

To test our program, I'm using a clip from the BBC series Blue Planet II.

While this may not be entirely ready for primetime yet, the results should keep improving as the AI models at Google and Amazon are trained on larger and larger datasets. When upgraded models reach Google's and Amazon's respective APIs, our users can take advantage of them immediately. In addition, there are many use cases that don't have the budget for having a video professionally dubbed by actors, while the target audience may still find value in getting a basic understanding of what the content is about. Since the process described in this blog can be fully automated, you could dub thousands of videos with dozens of target languages in just a matter of minutes.

Like all our AI Robots, both the /text/speak and /text/translate Robots are only available to paying customers. If you found this blog interesting, consider upgrading from our free Community Plan to our Startup Plan, which comes in at $49/mo and includes 10GB of encoding data each month.